Are we moving too fast with AI? While building an AI model, data scientists overlook risk, often leaving it unaddressed. To ensure dependability on the model, risk assessment becomes necessary to uncover potential harmful behaviors. Red teaming for LLMs offers a practical solution by stress testing AI models to find the specific weaknesses or preempt threats, and solve them before they can cause harm.

Everything from the training data, algorithm, and functions to model deployment needs to be error-free. What if the model bypasses safety restrictions, generates false responses, or hallucinates?

As AI becomes more powerful, the need for robustness, reliability, and efficiency in models grows. Ignoring these factors impedes the ethical and responsible development of AI. In this blog, we’ll explore how red teaming is applied to large language models (LLMs).

Do you know: Is LLaMA 3 HIPAA Compliant?

What is LLM Red Teaming?

LLM red teaming is the process of deliberately probing the model with complex prompts or unexpected scenarios. These tests performed on gen AI models with tricky prompts cause the LLM to respond in a manner that helps identify vulnerabilities, biases, and potential misuse. The goal is to uncover and address weaknesses to evaluate the model’s robustness, ethical compliance, and safety before it is widely deployed.

AI projects focusing solely on AI without adequate consideration for the potential negative consequences perpetuate bias in society. The process of red teaming involves simulating attacks to identify weaknesses and vulnerabilities in AI models. Red team your LLMs to protect against potential malicious users and harmful behavior and safeguard your company’s reputation from security and compliance risks.

What are the vulnerabilities in LLM?

An attempt to do red teaming for LLM is a preventive measure to check the model vulnerabilities. What are they? Let’s know here.

- May inherit biases: When the data LLM trained on is unrepresentative, it will give unfair outcomes that perpetuate negativity or existing stereotypes.

- Misuse of LLM: Generative AI models like LLM could be exploited for harmful purposes, such as spreading misinformation or enabling fraud. Through red teaming, an AI model becomes safe and reliable for deployment.

- Model Extraction: Hackers may try to steal functionality from a given LLM through repeated queries to the model. This needs to be corrected to meet safety standards as per industry regulations.

- Corrupt Data: Entering malicious data into a training dataset leads to biased or inaccurate model behavior that is susceptible to adversarial attacks.

- Lack of LLM validation: Proper sanitization of LLM responses eliminates vulnerabilities in the form of executing malicious code or systems compromise.

These are only a few examples of real-world vulnerabilities that may affect LLMs. However, as the AI field continues to advance, new and unpredictable vulnerabilities will emerge while underlining the need for new research and development in AI security assessment.

How is LLM red teaming different from traditional security testing?

Traditional security testing mainly involves revealing vulnerabilities in the IT infrastructure networks, systems, and applications, as well as simulated attack scenarios, like hacking or a data breach. LLM red teaming advances this approach. It targets those challenges unique to AI systems by focusing on these AI-specific weaknesses:

- AI-specific vulnerabilities: This includes targeting adversarial attacks (manipulating inputs to make the model mistakenly believe), biased exploitation, and privacy violations.

- Emphasis on emergent behavior: LLMs are complex and can sometimes exhibit unexpected behaviors. Red teaming seeks to identify such behaviors and assess their risks.

- Human-in-the-loop: In LLM red teaming, human involvement is fundamental to reviewing or assessing the responses from the model in terms of ethical implications and their effects on users.

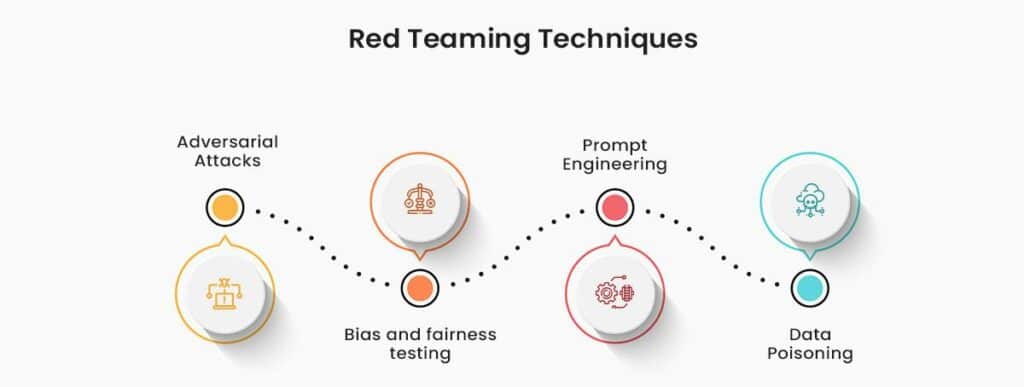

Exploring the Techniques of Red Teaming LLM

To put it simply, red teams would pretend to be enemies of LLM testing their behavior or outcomes. The techniques are discussed below:

1. Adversarial attacks

Adversarial attacks are inputs so designed that they confuse AI to make completely ridiculous mistakes, say something the model shouldn’t have, and reveal information the AI model is supposed to keep.

It includes adversarial inputs designed to manipulate the model into an incorrect outcome or reveal sensitive information.

Red-teaming large language models means tricking the AI into revealing its weaknesses. The goal here is to engineer prompts so sly that the model responds with something objectionable, setting traps for it to fall into adversarial attacks!

2. Bias and fairness testing

LLMs trained on vast amounts of text datasets are very good at generating realistic text. However, these models often show undesirable patterns revealing personal information (such as social security numbers) and perpetuating bias. This calls for fairness testing to identify hatefulness or toxic content.

For instance, earlier versions of GPT3 were known to exhibit sexist responses. The moment the red team discovers such undesired effects in the operation of an LLM, one can design counter-strategies that lead it away from them. Such is the case with GeDi, analyzing the model output for biases such as gender, race, religion, etc.

3. Prompt engineering

Prompt engineering is a method of designing effective jailbreak prompts. Red-teaming prompts are like regular, natural language prompts. Generating high-quality attack prompts can be both manual and automatic.

This explores the impact of different prompts on model behavior, including eliciting harmful or biased responses. Given that the prompt contains topics or phrases that lead to offensive output generations and the classifier signifies the prompt would lead to potentially offensive responses. That would, however, be extremely restrictive and result in an often biased model. Thus, there is a conflict between the model’s helpfulness (by following instructions) and being harmless (or at least less likely to enable harm).

4. Data poisoning

Language models are unpredictable. They’re not explicitly trained to do harmful things, but as they grow more powerful, they can develop surprising and unexpected abilities. Since we can’t predict exactly what they’ll do, the only way to test them is by creating every possible scenario where things could go wrong. It’s like stress-testing the model in a million different ways to see where it cracks.

Data poisoning for language models means identifying corrupted parts of training data with examples to observe how the model reacts. Does it start behaving oddly? Does it generate harmful or weird text? That’s what the red team tries to find out!

Ultimately, the safety of the model’s dependability rests entirely on how clever and thorough red-teaming is. The stronger and more creative the testing process is, the safer the models will be!

Who performs red teaming on LLMs and what skills do they need?

One common approach is role-play attacks, where testers simulate real-world scenarios to uncover potential risks. Domain-specific knowledge in healthcare or finance is very important for identifying unique ethical concerns and vulnerabilities associated with these fields.

Reinforcement Learning with Human Feedback, or RLHF also helps in red-teaming LLM. It is a process where humans oversee and judge the model’s responses. This is especially true when fine-tuning models to correct model alignment issues. Model alignment refers to making sure the model’s responses are ethically right according to human values. So, the insights and feedback during red-teaming exercises reveal areas of correction, errors, and improvement required for the model in future performances.

Can red teaming completely eliminate risks associated with LLMs?

No. Red teaming can’t eliminate all risks associated with LLMs entirely, but it anticipates an AI model’s shortcomings. Still, red teaming is important because by knowing where the model is lacking, the data scientists can work towards removing those threats or vulnerabilities by enhancing model capabilities and addressing potential issues before the model becomes irrelevant.

Most importantly, red teaming makes models more resilient to attacks by testing through adversarial inputs. Adversarial prompting refers to the crafting of inputs to trick or manipulate a language model into producing unintended, harmful, or incorrect outputs.

For LLM Red teaming, a qualified group of security assessment specialists is required while testing models. These services need specialist teams with a range of competencies that aggressively address dubious model responses. They assess the model under adversarial scenarios to identify blind spots or gaps via multiple rounds of testing.

Conclusion

The secret to developing AI responsibly is LLM red teaming. If red teaming is not incorporated into AI development, projects would have serious security vulnerabilities that could cause economic loss and societal harm. Nevertheless, risk identification and mitigation enhance system security and user safety. These risks have ethical implications for society, individuals, and the community. So outsource the red teaming of LLM and make your model risk-free and error-free.

Additionally, red teaming promotes the public’s trust in AI by identifying and combating biases in AI models to make them more equitable. By demonstrating a dedication to openness and responsibility in AI development, red teaming builds trust because it improves the efficiency and resilience of AI systems.